…of the fitness function in the EvoLisa project. In case you managed to miss it, EvoLisa is an already world famous project created by Roger Alsing earlier this week.

Continued from this post.

With just a few changes, we can actually make the original fitness function run 25 times faster. I’ll start by presenting the original code and then directly the improved version. After that I’ll discuss my reasoning behind each one of the changes.

Original code:

public static class FitnessCalculator

{

public static double GetDrawingFitness( DnaDrawing newDrawing, Color[,] sourceColors )

{

double error = 0;

using ( var b = new Bitmap( Tools.MaxWidth, Tools.MaxHeight, PixelFormat.Format24bppRgb ) )

using ( Graphics g = Graphics.FromImage( b ) )

{

Renderer.Render(newDrawing, g, 1);

BitmapData bmd1 = b.LockBits(

new Rectangle( 0, 0, Tools.MaxWidth, Tools.MaxHeight ),

ImageLockMode.ReadOnly,

PixelFormat.Format24bppRgb );

for ( int y = 0 ; y < Tools.MaxHeight ; y++ )

{

for ( int x = 0 ; x < Tools.MaxWidth ; x++ )

{

Color c1 = GetPixel( bmd1, x, y );

Color c2 = sourceColors[x, y];

double pixelError = GetColorFitness( c1, c2 );

error += pixelError;

}

}

b.UnlockBits( bmd1 );

}

return error;

}

private static unsafe Color GetPixel( BitmapData bmd, int x, int y )

{

byte* p = (byte*)bmd.Scan0 + y * bmd.Stride + 3 * x;

return Color.FromArgb( p[2], p[1], p[0] );

}

private static double GetColorFitness( Color c1, Color c2 )

{

double r = c1.R - c2.R;

double g = c1.G - c2.G;

double b = c1.B - c2.B;

return r * r + g * g + b * b;

}

}

[/sourcecode]

Optimized code, 25 times as fast:

[sourcecode language='csharp']

public struct Pixel

{

public byte B;

public byte G;

public byte R;

public byte A;

}

public class NewFitnessCalculator : IDisposable

{

private Bitmap _bmp;

private Graphics _g;

public NewFitnessCalculator()

{

_bmp = new Bitmap( Tools.MaxWidth, Tools.MaxHeight );

_g = Graphics.FromImage( _bmp );

}

public void Dispose()

{

_g.Dispose();

_bmp.Dispose();

}

public double GetDrawingFitness( DnaDrawing newDrawing, Pixel[] sourcePixels )

{

double error = 0;

Renderer.Render(newDrawing, g, 1);

BitmapData bd = _bmp.LockBits(

new Rectangle( 0, 0, Tools.MaxWidth, Tools.MaxHeight ),

ImageLockMode.ReadOnly,

PixelFormat.Format32bppArgb );

unchecked

{

unsafe

{

fixed ( Pixel* psourcePixels = sourcePixels )

{

Pixel* p1 = (Pixel*)bd.Scan0.ToPointer();

Pixel* p2 = psourcePixels;

for ( int i = sourcePixels.Length ; i > 0 ; i--, p1++, p2++ )

{

int r = p1->R - p2->R;

int g = p1->G - p2->G;

int b = p1->B - p2->B;

error += r * r + g * g + b * b;

}

}

}

}

_bmp.UnlockBits( bd );

return error;

}

}

First of all we notice that each time the fitness function is called, a new bitmap is constructed, used and then destroyed. This is fine for a function that seldom gets called. But for a function that is repeatedly called, we’ll be far better off if we reuse the same Bitmap and Graphics objects over and over.

Therefore I have changed the class from being static into one that muct be instantiated. Of course, that requires some minor changes to the consumer of this class, but in my opinion this will only be for the better. Although convenient, static methods (and/or singletons) are very hostile to unit testing and mocking, so I’m trying to move away from them anyway.

To my surprise, this first optimization attempt only buys us a few percent performance increase. I’m somewhat surprised at this, but anyway, it’s a start, and now it will get better. Read on.

So, once we’ve added a constructor to create the bitmap and graphics objects once and for all (as we’ll as making the class disposable so that the two GDI+ objects can be disposed) we move on to the real performance issues:

for ( int y = 0 ; y < Tools.MaxHeight ; y++ )

{

for ( int x = 0 ; x < Tools.MaxWidth ; x++ )

{

Color c1 = GetPixel( bmd1, x, y );

Color c2 = sourceColors[x, y];

double pixelError = GetColorFitness( c1, c2 );

error += pixelError;

}

}

[/sourcecode]

This code looks pretty innocent, eh? It is not.

Even for a moderately sized bitmap, say 1,000 by 1,000 pixels, the code in the inner loop is executed 1,000,000 times. Thats a pretty big number. This means that each tiny little "error", performance-wise, is multiplied by 1,000,000 so every little tiny tiny thing will count in the end.

So for example, just each method call will consume time compared to having the method's code inline within the loop. Above we find two method calls GetPixel and GetColorFitness which will be far better off moved inside the loop, but as I will end up explaining is that the worst performance hog here is really the innocent looking line "Color c2 = sourceColors[x, y];". Anyway, off we go:

[sourcecode language='csharp']

unchecked

{

unsafe

{

for ( int y = 0 ; y < Tools.MaxHeight ; y++ )

{

for ( int x = 0 ; x < Tools.MaxWidth ; x++ )

{

byte* p = (byte*)bmd1.Scan0 + y * bmd1.Stride + 3 * x;

Color c1 = Color.FromArgb( p[2], p[1], p[0] );

Color c2 = sourceColors[x, y];

int R = c1.R - c2.R;

int G = c1.G - c2.G;

int B = c1.B - c2.B;

error += R * R + G * G + B * B;

}

}

}

}

[/sourcecode]

The above changes, including changing the variables R, G & B from double into int will buy us approximately a 30% speed increase. Ain't much compared to 25 times but still we're moving on. Then we can look at the "Color c1" and notice that we can get rid of it completely by simply changing the inner code like so:

[sourcecode language='csharp']

byte* p = (byte*)bmd1.Scan0 + y * bmd1.Stride + 3 * x;

Color c2 = sourceColors[x, y];

int R = p[2] - c2.R;

int G = p[1] - c2.G;

int B = p[0] - c2.B;

error += R * R + G * G + B * B;

[/sourcecode]

Now we actually have code that executes TWICE as fast as our original code. And now we must turn our attention to the first two. The rest I don't think we can do much about.

Think about it. What we want to to is loop over each and every pixel in the image. Why then do we need to <em><strong>calculate </strong></em>the memory address for <em><strong>each pixel</strong></em> when we want to move to the <em><strong>next pixel</strong></em>? For each pixel we do completely unnecessary calculations. First "(byte*)bmd1.Scan0 + y * bmd1.Stride + 3 * x"; this contains four variables, two additions and two multiplications when really a single increment is all we need.

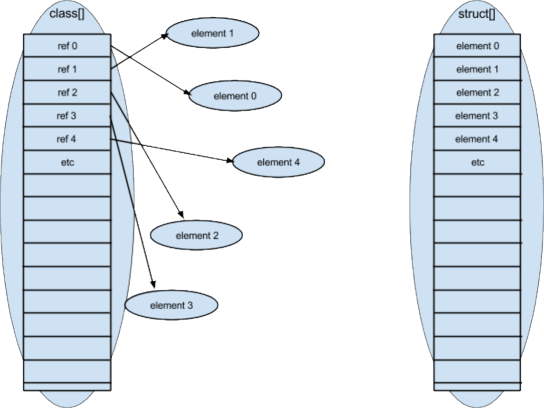

Then "sourceColors[x, y]". Fast enough and nothing we can improve here, right? No, no no, this is far WORSE! It looks completely harmless, but not only is a <strong>similar formula as the previous one</strong> taking place behind the scenes; for each pixel, the <em><strong>x and y parameters are being bounds checked</strong></em>, ensuring that we do not pass illegal values to the array-lookup!!!

So this innocent-looking expression will cause someting like this to happen somewhere around a million times for each fitness calculation:

// pseudo-code

if ( x < sourceColors.GetLowerBound( 0 ) || y < sourceColors.GetLowerBound( 1 ) || x > sourceColors.GetUpperBound( 0 ) || y > sourceColors.GetUpperBound( 1 ) )

throw new IndexOutOfRangeException( "(Index was outside the bounds of the array." );

Color c2 = *( &sourceColors + x * ( sourceColors.GetUpperBound( 1 ) + 1 ) + y );

Now we’re in for a little heavier refactoring. Unfortunately the sourcePixel matrix is laid out column-by-row instead of row-by-column which would have been better, so in order to solve this issue I’ll even change it into a vector of type “Pixel” instead. This requires change to the method signature and to the construction of the the matrix/vector itself of course, but once in place:

unchecked

{

unsafe

{

fixed ( Pixel* psourceColors = sourceColors )

{

Pixel* pc = psourceColors;

for ( int y = 0 ; y < Tools.MaxHeight ; y++ )

{

byte* p = (byte*)bmd1.Scan0 + y * bmd1.Stride;

for ( int x = 0 ; x < Tools.MaxWidth ; x++, p += 3, pc++ )

{

int R = p[2] - pc->R;

int G = p[1] - pc->G;

int B = p[0] - pc->B;

error += R * R + G * G + B * B;

}

}

}

}

}

we´re actually in for a performance improvement of 15 times!!!

Yeah, that’s actually how bad (performance-wise) the innocent looking line “Color c2 = sourceColors[x, y];” was. Bet some of you didn’t know that!!! 🙂

In order to change sourceColors from a matrix of Color into a vector of Pixel (declared as in the second code window above) I did this:

public static Pixel[] SetupSourceColorMatrix( Bitmap sourceImage )

{

if ( sourceImage == null )

throw new NotSupportedException( "A source image of Bitmap format must be provided" );

BitmapData bd = sourceImage.LockBits(

new Rectangle( 0, 0, Tools.MaxWidth, Tools.MaxHeight ),

ImageLockMode.ReadOnly,

PixelFormat.Format32bppArgb );

Pixel[] sourcePixels = new Pixel[Tools.MaxWidth * Tools.MaxHeight];

unsafe

{

fixed ( Pixel* psourcePixels = sourcePixels )

{

Pixel* pSrc = (Pixel*)bd.Scan0.ToPointer();

Pixel* pDst = psourcePixels;

for ( int i = sourcePixels.Length ; i > 0 ; i-- )

*( pDst++ ) = *( pSrc++ );

}

}

sourceImage.UnlockBits( bd );

return sourcePixels;

}

Probably a little overkill… but what the heck… Now I guess many people who are familiar with LockBits and direct pixel manipulation will cry out HEY YOU CAN’T DO THAT! YOU MUST TAKE THE “STRIDE” INTO ACCOUNT WHEN YOU MOVE TO A NEW SCAN LINE.

Well, yes… and no. Not when I use the PixelFormat.Format32bppArgb! Go figure! 🙂

So our new changes means that we process each ROW in the bitmap blindingly fast, as compared to the original version and combined with our caching of the bitmap we have gained a performance boost of 20 times!

Now for my final version I have rendered the drawing in PixelFormat.Format32bppArgb, which is the default format for bitmaps in GDI+. In that format each pixel will be exactly four bytes in size, which in turn means that GDI+ places no “gap” between the last pixel in one row and the first pixel in the next row and so we are actually able to treat the whole image as a single vector, processing it all in one go.

To conclude: in c# we can use unsafe code and pointer arithmetic to access an array far faster than the normal indexer, because we short-circuit the bounds checking. If we iterate over several consecutive elements in the array our gain is even larger, because we just increment the pointer instead of recalculating the memory address of the cells of the array over and over.

Normally we don’t want to bother with this because the gain of safe and managed code is bigger than the performance gain. But when it comes to image processing this perspective may not be as clear.

BTW, I wrote a post on a similar topic a few months ago: Soft Edged Images in GDI+.

EDIT: Continued here Ekeforshus

I’ve been playing around with image processing lately and since my last post about loading thumbnail images from files I couldn’t help myself from trying to roll my own “Web 2.0 reflection effect” directly in .NET 2.0 with no 3D support whatsoever. Actually, I think was more inspired by Windows Vista’s thumbnails (to the right) than the web.

I’ve been playing around with image processing lately and since my last post about loading thumbnail images from files I couldn’t help myself from trying to roll my own “Web 2.0 reflection effect” directly in .NET 2.0 with no 3D support whatsoever. Actually, I think was more inspired by Windows Vista’s thumbnails (to the right) than the web.